-

Kernels and Dot product

Kernels and Deep learning.

-

Operational vs Denotational

The question of language is not functional vs imperative. Rather Operational Semantic vs Denotational Semantic.

-

Reproducing Kernel Hilbert Space explained

Couple of simple yet in-depth resources to learn about Reproducing Kernel Hilbert Spaces (RKHS).

-

Differential Form

idea of differential form has interesting connection with lambda expression’s abstraction and application.

-

Dealing with Unknown

The 2nd paragraph of the Shannon's A Mathematical Theory of Communication paper says:The fundamental problem of communication is that of reproducing at one point either exactly or approximately a message selected at another point. Frequently the messages have meaning; that is they refer to or are correlated according to some system with certain physical or conceptual entities. These semantic aspects of communication are irrelevant to the engineering problem. The significant aspect is that the actual message is one selected from a set of possible messages. The system must be designed to operate for each possible selection, not just the one which will actually be chosen since this is unknown at the time of design.

BACHELIER's The THE THEORY OF SPECULATION paper that started the Finance theory starts off with:The determination of these fluctuations is subject to an infinite number of factors: it is therefore impossible to expect a mathematically exact forecast. Contradictory opinions in regard to these fluctuations are so divided that at the same instant buyers believe the market is rising and sellers that it is falling. Undoubtedly, the Theory of Probability will never be applicable to the movements of quoted prices and the dynamics of the Stock Exchange will never be an exact science. However, it is possible to study mathematically the static state of the market at a given instant, that is to say, to establish the probability law for the price fluctuations that the market admits at this instant. Indeed, while the market does not foresee fluctuations, it considers which of them are more or less probable, and this probability can be evaluated mathematically.

-

Fractional Derivatives and Robot Swarms

An interesting idea... Note to self: read the thesis

This is related to the observation that the intelligent system's interaction is more complicated than simple processes that model the interaction as a random and uncorrelated bouncing of particles (Brownian motion).

Which in turn may be related to

And more on fractional thinking: Fractional Calculus, Delay Dynamics and Networked Control Systems -

Functional Programming and Denotation Semantic

In The Next 700 Programming Languages paper Peter Ladin define the key feature of functional programming. He says:The commonplace expressions of arithmetic and algebra have a certain simplicity that most communications to computers lack. In particular, (a) each expression has a nesting subexpression structure, (b) each subexpression denotes something (usually a number, truth value or numerical function), (c) the thing an expression denotes, i.e., its "value", depends only on the values of its subexpressions, not on other properties of them. It is these properties, and crucially (c), that explains why such expressions are easier to construct and understand. Thus it is (c) that lies behind the evolutionary trend towards "bigger righthand sides" in place of strings of small, explicitly sequenced assignments and jumps. When faced with a new notation that borrows the functional appearance of everyday algebra, it is (c) that gives us a test for whether the notation is genuinely functional or merely masquerading.

It all starts with a functional language. Following lecture shows Denotational Semantic of an expression in Lambda Calculus to Set.

Once the meaning of an expression is knowns we need the meaning of the values that the expression reduces to. This is where as a designer you define the meaning of the values in your specific domain.

In the following lecture, Conal Elliot shows how you extend the denotational semantic to values. He contrasts this approach to the standard imperative API model. In imperative API the interface is about creating the state, initializing it and defining functions to side effect the state.

Functional programming, on the other hand, is about APIs to define things that "ARE" in the domain. There is a clear distinction between values and evaluation. So you have base values, transformation, and composition operators. In all, you need to make sure there is a clear, and precise meaning to the values and that it can lead to an efficient implementation. The distinction is sometimes hard to get. For me an analogy is SQL. An SQL expression defines that property of the result your are interested in, the database engine then turns that into an execution plan and evaluates it to give you the results.

The key take away from the talk is that it is not about Functional vs Imperative or if a particular language does or doesn't have lambda expression. It is about Denotational vs Operational semantic. Another word, do you think about your program in term of its execution model or in term of the meaning of the expressions.

An interesting concept that shows up in Conal's work is that the semantic function is a natural transformation. Bartosz Milewski does a nice job of explaining what the natural transformation is. -

In Opening Black Box Of Deep Neural

The Opening the Black Box of Deep Neural Networks via Information uses the diffusion process and heat equation as intuition for gradient flow between the layers of the deep network. I needed a refresher on the underlying math. Found some very good sources:

In "What is Laplacian" and subsequent video you get a great insightful introduction of Laplacian, heat and wave equations.

In the applied math section which continues to "Fourier and Laplace Transformation" section of the differential equation lecture series, there is also a shorter explanation of diffusion.

There is also stochastic nature to the whole thing that is nicely covered in the Stochastic process, Ito calculus , and stochastic differential equation section of the Topics in Mathematics with Applications in Finance lectures with Notes and Vidoes. -

From General Solution to Specific

One of the most important skills in mathematical problem solving is the ability to generalize. A given problem, such as the integral we just computed, may appear to be intractable on its own. However, by stepping back and considering the problem not in isolation but as an individual member of an entire class of related problems, we can discern facts that were previously hidden from us. Trying to compute the integral for the particular value a = 1 was too difficult, so instead we calculated the value of the integral for every possibly value of a. Paradoxically, there are many situations where it is actually easier to solve a general problem than it is to solve a specific.

Richard Feynman’s integral trick -

Communication and Learning

The fundamental problem of communication is that of reproducing at one point either exactly or approximately a message selected at another point. Frequently the messages have meaning; that is they refer to or are correlated according to some system with certain physical or conceptual entities. These semantic aspects of communication are irrelevant to the engineering problem. The significant aspect is that the actual message is one selected from a set of possible messages. The system must be designed to operate for each possible selection, not just the one which will actually be chosen since this is unknown at the time of design.

A Mathematical Theory of Communication -

Hamming on Learning, Information Theory....

An interesting set of lectures by Hamming of Hamming code fame -

Bottleneck, Deep learning and ML theory.

The Anatomize deep learning with information theory is a nice summary of the bottleneck theory and deep learning talk by Professor Tishby. The blog also has a nice reference to a two part write up on traditional learning theory that doesn't actually explain the success the deep learning: Tutorial on ML theory, part1and part 2.

Update: A really interesting article on To Remember, the Brain Must Actively Forget This is very similar to the idea of the bottleneck theory and deep learning models. -

Polymorphism and Design Pattern Haskell Style

The following short demonstration does a nice job capturing parametric polymorphism and design patterns in Haskell.

Domain Modelling with Haskell: Generalizing with Foldable and Traversable

It is easy to see polymorphism in a list. You can have a list of people, and you map a function over them to get their names. Now you have a list of strings, or Maybe Strings for the names of each person on the list. So the list went from List of People to List of Maybe Strings.

This a bit more challenging to see in your own data types. In the video above the data type for Project is modified to use a polymorphic parameter "a" instead of fixed project id. This allows for the project to maintain its structure (the constructors) as functions are applied to the subelements of the structure. This is just like what happens in the list but it is a bit harder to see if you are accustomed to generics in the OO sense.

The notion of design pattern is also interesting in this lecture. There are two main issues with the traditional GOF design pattern. First, is that if there is a pattern why isn't it implemented in code once and reused everywhere. Second, patterns are not in the design but in forms of computation. In this video, you can see the computational patterns Functor, Foldable and Traversable are used in the computation. More importantly, as they are patterns it can be automatically derived. -

Regression

I like this series of short lectures on Regression.

Statistical Regression -

Why you cant just skip Deep Learning.

An interesting post: Dear Aspiring Data Scientists, Just Skip Deep Learning (For Now).

Hardly a day goes by that I don't hear the same sentiment expressed in one way or another. The problem is that they are correct and wrong at the same time.

The problem is terminology. Let's use the terminology defined in What's the difference between data science, machine learning, and artificial intelligence?

So far as you are looking at Data Science and Machine Learning (and your focus is to be hired) that is insight and prediction, then the article is valid. If your goal is a prediction, then why not use the simplest method, it is easier to train and generalize. Furthermore, they are correct in that training for image recognition, voice processing, computer vision... you need a massive amount of data and processing power. This is not where most DS/ML jobs are.

The problem they are missing, again going back to the definition above, is prediction vs action. So long as the goal of DS/ML is to gather insight and prediction for humans then arguments are valid. It is when you want to take an action that it all falls apart. Humans can potentially apply their domain knowledge and navigate the predictions to find the optimum action.

But for machines, it is very different. You basically have three choices to determine the best action, apply human-derived rules to the predictions (1980s AI), reduce the problem to an optimization program (linear or convex), or essentially use reinforcement learning to derive a policy to deal with the uncertainty of your prediction. This is I think the essence of Software 2.0 or what I like to call Training Driven Development (TrDD) -- More on this later.

If rules and/or optimization works then great you are done. But when that is not an option, then in the model prescribed by the article, you need to combine a policy neural network with your ML prediction. The problem now is that you have two islands to deal with, your object loss function's gradient from neural network can't propagate to your ML prediction. Simplifications that worked so well for ML prediction for humans now are being amplified as errors in your policy network. I don't know how you can have a loss function that can train the policy and communicate with the say a linear regression model's loss function.

After reading Optimizing things in the USSR I ordered my Red Plenty book. It has some interesting observation as to what happens when you "simplify assumptions". -

Information Theory of Deep Learning. Bottleneck theory

The Information Bottleneck Method by Tishby, Pereira, and Bialek is an interesting way to look at what is happening in a deep neural network. You can see the concept explained in following papers- Deep Learning and the Information Bottleneck Principle , and

- ON THE INFORMATION BOTTLENECK THEORY OF DEEP LEARNING

Professor Tishby also does a nice lecture on the topic in

Information Theory of Deep Learning. Naftali Tishby

With a follow-on alk with more detail:

Information Theory of Deep Learning - Naftali Tishby

Deep learning aside, there are other interesting applications of the bottleneck method. It can be used to categorize music chords:

Information bottleneck web applet tutorial for categorizing music chords

and in this talk, the method is used to quantify prediction in the brain

Stephanie Palmer: "Information bottleneck approaches to quantifying prediction in the brain"

I found the following talk also interesting simplified version of the concept applied to deterministic mappings:

The Deterministic Information Bottleneck -

Visualization

Nice visualization of Hilbert Curves and Linear Algebra.

I like this comment by "matoma" in Abstract Vector Space:

Furthermore, why does the exponential function appear everywhere in math? One reason is that it (and all scalar multiples) is an eigenfunction of the differential operator. Same deal for sines and cosines with the second-derivative operator (eigenvalue=-1).

Noting the definition of Eigenfunction -

Data science, machine learning, and artificial intelligence....

I like this explanation:

What's the difference between data science, machine learning, and artificial intelligence? -

Affine Transformation

Nice visuals on Affine Matrix Transformation -

Nice Lecture series on Causality

In Reinforcement Learning you need to define functions for rewards and state transitions. Big data is an good source of modeling external actors to a deep learning system. What is needed is a causality model for the data to be able to simulate the external world correctly.

This set of lectures are quick introduction to the field.

part 1

part 2

part 3

part 4 -

On back propagation algorithm

Nice detailed explanation of back propagation in neural networks:- A nice post using straight python

- Couple of nice post using numpy basic neural network and RNN.

- MIT class notes

- Graph view of the gradient. The "Computational Victories" has good explanation of why back propagation is the preferred method of gradient calculation.

-

DO YOU KNOW HOW MUCH YOUR COMPUTER CAN DO IN A SECOND?

Let find out.... -

Encoding Computation.

https://www.youtube.com/watch?v=eis11j_iGMs -

Calculus and Algebra

I thin this video capture the essence of calculus.

"We have a geometric concept, and we want an algebraic expression for it".

It could be: we have XYZ concept, and want and algebraic expression for it.

An XYZ calculus (such as Tensor calculus in case of Geometry, differential calculus in case of algebra, Relational Calculus in case of Relational Algebra, Lambda Calculus in case of Function algebra...) is used to write an algebraic expression which is then solved with algebraic laws to reason.

The method seems to always be "Write down the correct identity"... Struggling to find how this applies to Lambda Calculus.. -

Nice talk on Functional Programming

I enjoyed the simplicity and clarity of this talk

Managing Complexity, Functionally -

"logic models provability rather than truth"

Nice post on Type System and Logic. -

Adventures with types

Another excellent talk about types in Haskell by Simon Peyton Jones. -

Making DSL Fly

I like this presentation on DSL and its performance characteristics in Haskell. -

Oregon Summer School

These are amazing set of lectures on computer programming.

2012 Oregon Summer School -

Interesting talk about use of Haskell in the wild

Interesting talks on use of Haskell in Barclays for mission critical applications.

Haskell at Barclays: Exotic Tools for Exotic Trades

The section about Generic programming using Catamorphisms and Sharing of computations is quite interesting. I also like the idea of Barclays first developed an EDSL then a DSL that it compiled to the EDSL. Having a DSL allows you to give application specific error messages that would be difficult when you have EDSL in a mother language (in this case Haskell). I also liked their mention of the UUParsing library out of Utrecht. There is an interesting tutorial (and class notes) on the parser that I have been studying and find it very useful in trying to learn functional thinking and design. -

Understanding and Using Regular Expressions

Nice Presentation on Regular Expression

Understanding and Using Regular Expressions -

Bayesian framwork

Interesting application of bayesian framework. Bayesian framework is interesting in context of functional programming, as it too, is all about compositional frame work. Building a complex probabilistic model from observations, uncertainty about observations, and prior beliefs.

Search for the Wreckage of Air France Flight AF 447 -

You're up and running! WOOHOO

Next you can update your site name, avatar and other options using the _config.yml file in the root of your repository (shown below).

The easiest way to make your first post is to edit this one. Go into /_posts/ and update the Hello World markdown file. For more instructions head over to the Jekyll Now repository on GitHub.

-

What is meaning of denotation semantic ....

An absolute not to be missed lecture by David Sankel: The intellectual Ascent to Agda.

He talks about Denotational Semantic and interestingly describing it as: augmenting math to talk about meaning and then extend math to program in math. -

Origins of Functional Programming

A very nice talk on origins of functional programming. -

Imagination and Math

There’s Enough Math in Finance Already. What’s Missing is Imagination.

Emanuel Derman -

Dedication!

In "The Lady Tasting Tea" the author (David Salsburg) talks about calculations done in a Statistical paper published in 1920s by R. A Fisher. Mind blowing to think that he used this machine to do the tables and charts in the paper:

The author estimates it must have taken him 185 hours to prepare the tables. -

Interesting use of probabilistic model

In these lecture the presenter describes a system of differential equation that is solved for a set of initial condition. The results are used to build a probabilistic model, using the distribution of values and the probability model for state transitions in a dynamic model. The dynamic network is then used to make inference on the system directly with out needing to solve the differential equation.

An interesting side note is that with deterministic differential equation describing a system (in this case a biological system) our observation of the state of the system is noisy and infrequent (once every 30 minutes). Further justifying the probabilistic approach over a deterministic approach. -

Mechanical Automaton

A mechanical view of coding. The Writer Automaton -

Monads vs Objects

The examples in documentation of MonadRandom does a good job on how monads are useful. I have it cut and pasted here:There is some correspondence between notions in programming and in mathematics:

random generator ~ random variable / probabilistic experiment result of a random generator ~ outcome of a probabilistic experiment Thus the signature

rx :: (MonadRandom m, Random a) => m a

can be considered as "rx is a random variable". In the do-notation the line

x <- rx

means that "x is an outcome of rx".

In a language without higher order functions and using a random generator "function" it is not possible to work with random variables, it is only possible to compute with outcomes, e.g.

rand()+rand(). In a language where random generators are implemented as objects, computing with random variables is possible but still cumbersome [as you need to wrap the generators (Composition Pattern) in object and perform the computation as methods of the new object.]In Haskell we have both options either computing with outcomes

do x <- rx

y <- ry

return (x+y)or computing with random variables

liftM2 (+) rx ry

This means that liftM like functions convert ordinary arithmetic into random variable arithmetic [Since you can't do lift in OO, you would need to write your own version of each operation you like to support]. But there is also some arithmetic on random variables which can not be performed on outcomes [Or would be adhoc in OO]. For example, given a function that repeats an action until the result fulfills a certain property (I wonder if there is already something of this kind in the standard libraries)

untilM :: Monad m => (a -> Bool) -> m a -> m a

untilM p m = do x <- m

if p x then return x else untilM p mwe can suppress certain outcomes of an experiment. E.g. if

getRandomR (-10,10)is a uniformly distributed random variable between −10 and 10, then

untilM (0/=) (getRandomR (-10,10))is a random variable with a uniform distribution of {−10, …, −1, 1, …, 10}. -

Gaussian Process

A really nice tutorial lecture on Gaussian Process. -

Computational Oriented Programming instead of Functional Programming.

IMHO, the term "functional programming" doesn't do justice to the concept that lies behind it. I like to think of it as Computational Oriented Programming.

The idea is that you have computations (e.g. state-full computation, non-deterministic, or reactive computation). Each computation is a polymorphic type. You think of your computations as having:- Constructors

- Morphism

- Observers

Constructors are either when you build a new computation from external types (e.g. putting an integer in a non-deterministic computation -list), or they are higher level combinators that combine two or more of your computation type to a new instance of your computation (concatenating two lists) . Morphisms are when you apply a function to the values in your computation (add one to every element of the list). End result is the same computation but the values have been morphed. The key point in constructors is that the end result is the same computation type that you started with.If you always stay within your computation there is no value to the outside world. So you need observers to export the result of your computation to other forms of computations. Caveat: the term observers here is not the same as the observers that is used in some of the FRP implementation.So when you think of say Functional Reactive Programming you start thinking in a about the constructors and combinators that you would need to express an event processing. Your goal here is to define all the ways one can possibly construct an event handler. A key point in your design must be to define the algebra of combining the FRP computation constructors. The algebra would ensure that any complex combination of the constructors and morphism on the type would be coherent and consistent, otherwise you have a problem in your hand and you need to go back to the drawing board. Once the constructors and combinators are defined a user would be able to define the event handlers. Next you need to define the ways the user would be able to get the result of event handler computations to the outside world. When you are done you have created a new type of computation that due to its polymorphic nature can be used in variety of contexts. That in the essence how you do functional designs. -

Functional Talks

A nice blog on interesting collection of functional talks.

Check them out here -

Even more reasons not to use Scala

In a response to my post to Quora, where I recommended against use of Scala for any serious project, a reader posted this:Let's say you have three functions (f,g, and h) that receive an integer and performs an asynchronous computation, returning Future of Int and you need to chain the three functions together (use the result of one computation as input to the next one). In Scala you'll do:

f(x).flatMap(g(_).flatMap(h(_)))

or with the "for" notation:

for {

i <- f(x)

ii <- g(i)

iii <-h(ii)

} yield iii

What appears to be a cleaver (or "cute") use of monadic composition is actually seems to be completely misleading. A closer look at the "flatMap" implementation in Future shows that:def flatMap[S](f: T => Future[S])(implicit executor: ExecutionContext): Future[S] = {

val p = Promise[S]()

onComplete {

case f: Failure[_] => p complete f.asInstanceOf[Failure[S]]

case Success(v) =>

try {

f(v).onComplete({

case f: Failure[_] => p complete f.asInstanceOf[Failure[S]]

case Success(v) => p success v

})(internalExecutor)

} catch {

case NonFatal(t) => p failure t

}

}(executor)

p.future

}

Another word, your set of futures are no longer running independently and asynchronously (as you would expect in Future) they are composed sequentially in a single Future. If that was your original goal, then you should have composed the functions (in this case f, g, h) and run them in a Future. On the other hand when you do:f(x).flatMap(g(_).flatMap

you must be thinking that the functions are running in parallel and working on each others output. But it seems (reading the code and not actually done a test, as I don't have the Scala dev environment handy) to me that "g" would not run at all until "f" is finished. Again not what you would expect when you are using Futures.(h(_))) -

Bayesian Learning Lectures

A set of very informative, not to be missed, lectures on the Bayesian learning by Dr Draper.

Bayesian Modeling, Inference, Prediction and Decision-Making -

What is "high level" or "low level" or "functional"?

......what does it mean for one library to be more "high level" or "low

level" or "functional" than another? On what basis should we make such

comparisons? A pithy answer is given by Perlis:A programming language is low level when its programs require attention

But how should we decide what is relevant?

to the irrelevant.

Alan PerlisWithin the functional programming community, there is a strong historical

connection between functional programming and formal modeling.

Many authors have expressed the view that functional programming languages

are "high level" because they allow programs to be written in terms of an

abstract conceptual model of the problem domain, without undue concern for

implementation details.Of course, although functional languages can be used in this "high level"

way, this is neither a requirement nor a guarantee. It is very easy to write

programs and libraries in pure Haskell that are littered with implementation

details and bear little or no resemblance to any abstract conceptual model of

the problem they were written to solve

Source Genuinely Functional User Interfaces -

Meaning of NOSql and BigData for Software Engineers

The Term NoSql as is No SQL doesn't convey the real meaning of the concept behind NOSql. After-all SQL stands for Standard Query Language. It is a language of querying relations which is based on Relational Algebra and Relational Calculus. It is a query language has no bearing on the kind of processing that NoSql implies. In fact you can use SQL to query your “NoSql/BigData” as in Hive.The Term NoSql as “Not Just SQL” is closer to the meaning implied by NoSQL but still doesn't really convey what the NoSQL is all about. It is not about what language you use to query your data.Big Data is also not really meaningful. Sure the size of data might be large but you can have NoSQL problem with small data (at least in today's relative terms).IMHO, NoSQL intends to say your data is not ACID. ACID as in Atomic, Consistent, Isolated, and Durable has been the corner stone of the transactional databases. In "NoSql" you are dealing persisted data without strict grantees on its Atomicity, Consistency, Isolation, and/or Durability. Another word you have noisy data with duplication, inconsistency, loss. The goal of NoSql is to develop software that can work with such data. Even if you have small size but noisy data, the standard algorithms that work on ACID data would not yield useful results. To them non-ACID data is garbage and you endup with garbage-in-garbage-out dilema.A better way to think of NoSql is to think of problem of inference about the underlying model in the data, prediction on future data, and/or making decisions all using the noisy data (of any size). That is the problem that has been address in the statistics community as Bayesian analysis. The challenge for software developers tackling NoSql/Big Data problems is to understand and incorporate statistical analysis in their application. A good place to start on this are an excellent encyclopedic write up by Professor David Drapper's Bayesian Statistics or his priceless in-depth lectures on the topic Bayesian Modeling, Inference, Prediction and Decision-Making. -

When to use Applicative vs Monad

A nice rule of as to when you must use Monad and when you can use Applicative style:

How do you use Control.Applicative to write cleaner Haskell?

Update: May be another way to look at this is to think of >>= as combinator that applies the mapping function then flattens the result of application of (a -> mb), and (in some implementations) change the computation based on the value of the "a". In computations where the >>= is only flattening the types, it can be converted to an Applicative computation. -

Adding numbers as digits in a list

In a phone interview with an un-named company :) I was asked to come up with solution for hypothetical adder where input data are integers but are given as list of single digits. For instance 345 would be [3,4,5]. Problem is to write an adder for the numbers. I kind of like the simplicity of the solution in Haskell ( as oppose to my java-like doodle in the interview).

You can find my code Here

-

Refreshing my statistics

I am watching a nice set of lectures of Harvard's Stat 110 class.

Watch the Lectures

Professor Joe Blitzstein is great. One particularly puzzling problem is presented at 36:00 minute of Stat 123 teaser.

I have not been able to figure out the puzzle in the expected value of Euro and $. If you happen to have any ideas on this I would appreciate it. -

Yet another good use of delimited continuation

Scope herding with delimited continuationsOne confusing aspect to the continuation discussion is that in Scheme (where continuation got its start) future of computation is what is to the left of the shift. For instance in (reset (foo (bar ( shift....)))) the bar and foo functions are the rest of the computation after the shift (thus the context that is passed to the shift function). Whereas in Haskell's do notation we have

shift $ do

.....

shift ( some function here )

bar

foo

Here the rest of the continuation (the bar, and foo) are what follows the shift to the end of the do block. Seems more intuitive, doesn't it?

-

Tutorial on Continuation and Delimited Continuation

A nice tutorial on Continuation and delimited Continuation here. -

I Like This Post On What Monadic

I like this post on what monadic parsers are and where they are useful.

This exchange further explains when you need monadic power.....use the simplest tool which gets the job done. Don't use a fork lift when a dolly will do. Don't use a table saw to cut out coupons. Don't write code in IO when it could be pure. Keep it simple.

But sometimes, you need the extra power of Monad. A sure sign of this is when you need to change the course of the computation based on what has been computed so far. In parsing terms, this means determining how to parse what comes next based on what has been parsed so far; in other words you can construct context-sensitive grammars this way. -

Usecase and Aspect

Aspect programming feels to me like adding functional thinking in OO. An article from a few years ago that sounds interesting

http://www.jot.fm/issues/issue_2003_07/column1.pdf and slides http://aosd.net/archive/2003/jacobson-aosd-2003.ppt -

An interesting talk... Riak Core: Dynamo Building Blocks

InfoQ: Riak Core: Dynamo Building Blocks -

Logic Book online

An interesting logic book online. Logic in Action -

Good examples on Arrows

I like this examples of Arrows. -

Finding largest rectangular area without trees

In an interview I was asked a question very similar to this or this.

In my version there is a rectangular piece of land with trees on it. We know the coordinates of the land and the location of the trees on the land. Goal is to find the largest contiguous rectangular area within our land that doesn't contain any trees.

Solving these problems require you to develop the properties of the problem that then you can base an algorithm on for which there is no time. My response in the interview was what I consider brute force method of trying to take a square and see how big it can grow. Then try to find the largest one.

But thinking about the problem more I said what if I had a land and one tree. Then I get essentially 4 areas. Now if I have two trees, I should be able to take the areas from the first tree and check to see if the 2nd tree would influence them. If the 2nd tree is inside an area, then it would give me potentially 4 areas to work with. That is the one area is replaced with up to 4 (removing the original one). If there are no tree in the area then the area is unaffected by the 2nd tree. So now I can try the 3rd, 4th... tree. Each time check the effect of the tree on the land. At the end the one with the largest area is my answer. This solution doesn't care about the size of the land, just the number of trees.

Here is what I wrote in Haskell that I think solves the problem. The implementation takes advantage of foldM library to do most of the work. In foldM signature, "a" is my land, "b" is the tree, and "ma" is List of a which is a list of land pieces. Any feedback is appreciated. -

How glues make a difference

There are folks that say programming language does matter. Some compare it to glue that allow you freedom to build parts from smaller part. It was hard for me to appreciate this until I saw real examples. What I have learned is that with right glue your imagination can be liberated to think about possibilities and that is what make the choice of language matter.

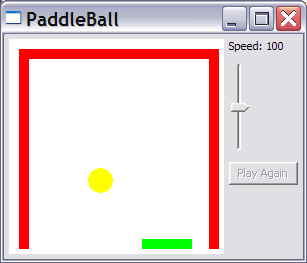

Lets take the problem of a simple game. Let say you want to write code to implement the paddle ball.

If you think Object Oriented about this game. You see Ball, Paddle, Wall, Score..... OO techniques would give you a great way to organize your code. All the code concerning Ball would be encapsulate in the Ball object. Then the key design decision you need to make for the Ball, Paddle and Wall objects is how to detect collision between Ball and Paddle or Wall. Then figure out who does the bounce of the ball. Is the ball object going to detect the bounce, is the wall telling the ball to bounce... So you put on your "Object Think" hat on. Is it the ball that is detecting the collision, the wall? Hmmmm "Trying to Object Think and nothing is happening. You give up, may be the Collision Manager that watches over all objects is simpler to think about! Object Think => Let manager decide :) You also need to worry about user input controlling the Paddle. Is the code event driven (Inversion of Control) or is it polling for the inputs....

However you decide to slice the implementation among your object, one thing is going to be true. You are only thinking about the objects in the paddle game. At best you may be able to reuse your ideas in similar game. But the design, if you call what you are doing here as design, is adhoc and has very limited potential.

If you step back and drop your OO thinking and start looking at problem differently you be surprised at how all games are the same and hence can be implemented on common model.

If you think of the game as a computation instead of bunch of object then it all looks different. You ask yourself what is nature of the computation in this game? One possible answer is to see the game as Interactive (user controls the paddle via key inputs) Animation (graphics that changes over time). You can further define Animation as time-series (functions of time) graphics. So now you have three different concepts. Interactive, Time-Series, and Graphics. Sum of them would give you the language to express the game while each one of those ideas can be viewed by itself. What would Interactive computation need to have? What would Time-Series computation need? and finally what would Graphics packages need to have to be fully expressive. In all three cases you are asking yourself the compositional needs of each of your concern and define a language for it. For instance, on the graphics you will define a language to have simple shapes, complex shapes as and/or/xor of simple shapes, transposition of shapes..... Most likely you will find an existing library that defines appropriate DSL for each of the concerns of our game.

Ideally you want a programming language that allows you to compose notions (in this case interactive, animation, and graphics) in one grand notion such that you can express your game in. So you say key x causes mouse to go left, or right, ball travels at some velocity (speed and direction), when the ball hits wall or paddle it bounces ( you defined a bounce as change in the direction of velocity). If ball passes the edge it is outside, game is over.. You are done. It is all basically the description of the game. Even better, you can reuse the same notions and may be describe the car racing game. If the approach interest you, you can get more details in SOE.

All of sudden in the new perspective you have something that is truly re-usable. You may be able to implement this in Java too. But the key idea, as we saw here, is that the standard OO techniques leads you to a dead end, you are worrying about non issues and the result has limited potential. Furthermore, where your native language doesn't give you the compositional capabilities you need, you be forced to try to figure out ways to implement them. It may be verbose, ugly and hard to understand which is why most people end up with mainstream usage model of the language.

So that is how the language does matter. Glues make it all possible to build powerful abstraction. -

Wiggle room to exploit alternate representations and implementations.

A nice presentation on Parallel programming.

My Favorit slide:Algebraic Properties Are Important!

• Associative: grouping doesn’t matter!

• Commutative: order doesn’t matter!

• Idempotent: duplicates don’t matter!

• Identity: this value doesn’t matter!

• Zero: other values don’t matter!

Invariants give the implementation wiggle room, that is, the

freedom to exploit alternate representations and implmentations.

In particular, associativity gives implementations the necessary

wiggle room to use parallelism—or not—as resources dictate.

My favorite comment was about the C language having the address of operator (pointers). Whereas a Java program has no such thing and thus JVM can garbage collect knowing that the program is not depending on the memory location as it may have been in C. By giving up the address of operator, the JVM has the wiggle room to implement the garbage collection.

Abstractions are made based on the properties (aka "wiggle room") of the underlying concept. -

WOW! my Java code sample from 96

Found my code back in an Java article on 03/01/96! -

Functional programming in hardware..

On the tangible programming there is also the Conal Elliot talk that shows the same idea in Haskell -

Insight into continuation and Haskell

A really nice introduction to continuation.

The talk covers Scheme's implementation of Continuation. What is interesting to note is that in language like Scheme, or Java to implement continuation you need hooks in the native language. For instance, in this interview Bill Venners tries to explain the Java CM changes that are required to capture the continuation.

But if you look at Haskell version of continuation, you realize that there are no changes in the underlying language. Continuation, just like Exception handling is implemented as a library (Control.Monad.Control.ContT). Even delimited continuation can be done as a library: MonadCont or CC-delcont. -

Nice talk....

The "Monadologie: Professional Help for Type Anxiety" talk by Chris League does nice job explaining:

* CPS and Delimited Continuation

* Application of Continuation to Parsing

* State monad application to the tree injection.Monadologie: Professional Help for Type Anxiety from Nathan Hamblen on Vimeo.

-

Amazing discussion in 1966!

At the end of the Peter Ladin's "The next 700 programming languages" There is a very interesting discussion between giants (Strachey, Landin, Smith, Young, and Abrahams) of the software engineering. A definite must read...Strachey: I should like to intervene now and try to initiate a slightly more general discussion on declarative or descriptive languages and to try to clear up some points about which there is considerable confusion. I have called the objects I am trying to discuss DLs because I don't quite know what they are. Here are some questions concerning ])Ls: (1) What are DLs? (2) What is their relationship to imperative languages? (3) Why do we need DLs? (4) How can we use them to program? (5) How can we implement them? (6) How can we do this efficiently? (7) Should we mix l)Ls with imperative languages?

It seems to me that what I mean by DLs is not exactly what other people mean. I mean, roughly, languages which do not contain assignment statements or jumps. This is, as a matter of fact, not a very clear distinction because you can always disguise the assignments and the jumps, for that matter, inside other statement forms which make them look different. The important characteristic of DLs is that it is possible to produce equivalence relations, particularly the rule for substitution which Peter Landin describes as (~) in his paper. That equivalence relation, which appears to be essential in alost every proof, does not hold if you allow assignment statements. The great advantage then of l)Ls is that they give you some hope of proving the equivalence of program transformations and to begin to have a calculus for combining and manipulating them, which at the moment we haven't got.

I suggest that an answer to the second question is that DLs form a subset of all languages. They are an interesting subset, but one which is inconvenient to use unless you are used to it. We need them because at the moment we don't know how to construct proofs with languages which include imperatives and jumps.

How should we use them to program? I think this is a matter of learning a new programming technique. I am not convinced that all problems are amenable to programming in DLs but I am not convinced that there are any which are not either; I preserve an open mind on this point. It is perfectly true that in the process of rewriting programs to avoid labels and jumps, you've gone half the way towards going into 1)Ls. When you have also avoided assignment statements, you've gone the rest of the way. With many problems yeu can, in fact, go the whole way. LisP has no assignment statements and it is remarkable what you can do with pure Lisp if you try. If you think of it in terms of the implementations that we know about, the result is generally intolerably inefficient--but then that is where we come to the later questions.

How do we implement them? There have not been many attempts to implement DLs efficiently, I think. Obviously, it can be done fairly straightforwardly by an interpretive method, but this is very slow. Methods which compile a runable program run into a lot of very interesting problems. It can be done, because DLs are a subset of ordinary programming languages; any programming language which has sufficient capabilities can cope with them. There are problems, however: we need entities whose value is a function--not the application of a function but a function--and these involve some problems.

How to implement efficiently is another very interesting and difficult problem. It means, I think, recognizing certain subsets and transforming them from, say, recursions into loops. This can certainly be done even if they have been written iu terms of recursions and not, as Peter Landin suggested, in terms of already transformed functions like iterate or while.

I think the last question, "Should DLs be nIixed with imperative languages?", clearly has the answer that they should, because at the moment we don't know how to do everything in pure DLs. If you mix declarative and imperative features like this, you may get an apparently large programming language, but the important thing is that it should be simple and easy to define a function. Any language which by mere chance of the way it is written makes it extremely difficult to write compositions of functions and very easy to write sequences of commands will, of course, in an obvious psychological way, hinder people from using descriptive rather than imperative features. In the long run, I think the effect will delay our understanding of basic similarities, which underlie different sorts of programs and different ways of solving problems.

Smith: As I understand the declarative languages, there has to be a mixture of imperative and descriptive statements or no computation will take place. If I give you a set of simultaneous equations, you may say "yes?", meaning well, what am I supposed to do about it, or you may say "yes", meaning yes I understand, but you don't do anything until I say "now find the values of the variables." In fact, in a well-developed language there is not just one question that I can ask but a number of questions. So, in effect, the declarative statements are like data which you set aside to be used later after I give you the imperatives, of which I had a choice, which get the action.

Strachey: This is a major point of confusion. There are two ideas here and I think we should try to sort them out. If you give a quadratic equation to a machine and then say "print the value of x", this is not the sort of language that I call a DL. I regard it as an implicit language--that is, one where you give the machine the data and then hope that it will be smart enough to solve the problem for you. It is very different from a language such as LisP, where you define a function explicitly and have only one imperative. which says "evaluate this expression and print the result."

Abrahams: I've clone a fair amount of programming in LISP, and there is one situation which I feel is symptomatic of the times when you really do want an imperative language. It is a situation that arises if you are planning to do programming in pure Lisp and you find that your functions accumulate a large number of arguments. This often happens when you have a number of variables and you are actually going through a process and at each stage of the process you want to change the state of the world a little bit-- say, to change one of these variables. So you have the choice of either trying to communicate them all, or trying to do some sort of essentially imperative action that changes one of them. If you try to list all of the transitions from state to state and incorporate them into one function, you'll find that this is not really a very natural kind of function because the natures of the transitions are too different.

Landin: I said in iny talk that LisP had not gone quite all the way and I think that this difficulty is connected with going all the way. If we write a function definition where the right-hand side is a listing of expressions such as

F(x) = E1 , E2, E~

thel~ we can say that this function will produce a three-list as its result. If llOW we have ~mother function G(x, y, z) = E, on some occasion we might have an expression such as G(a 2, b 2, c ~) and we often feel that we should be able to write G(F(t)), and another example which should be allowed is

G(a > b --~ E1 , E2 , E3 else E4 , E5 , E6).

l am not quite sure but I think you can get around your problem by treating every function as if it were in fact monadic and had a single argument which was the list structure you are trying to process.

Abrahams: This is a difficulty in other programming languages too; you cannot define a function of an indefinite number of arguments.

Naur: I still don't understand this distinction about an implicit language. Does it mean that whenever you have such a language there is a built-in feature for solving equations?

Abrahams: I think the point is whether you are concerned with the problem or are concerned with the method of solution of the problem.

Ingerman: I suggest that in the situation where you have specified everything that you want to know, though the exact sequence in which you evoke the various operations to cause the solution is left unspecified, then you have something which is effectively a descriptive language; if you have exactly the same pieces of information, surrounded with promises that you will do this and then this, then you have an imperative language. The significant point is that it is not all or nothing but there is a scale and while it is probably pretty simple to go all the way with imperatives, the chances of being ttble to get all the way descriptive is about zero, but there is a settle and we should recognize this scale. Smilh: I think that there is a confusion between implicit or explicit on the one hand and imperative or declarative on the other. These are two separate distinctions and can occur in all combinations. For illstance, an analog computer handles ilnplicit declaratives.

Young: I think it is fairly obvious that you've got to have the ability for sequencing imperatives in any sort of practical language. There are many, many cases in which only a certain sequence of operations will produce the logically correct results. So that we cannot have a purely declarative language, we must have a general purpose one. A possible definition of a declarative language is one in which I can make the statements (a), (b), (c) and (d) and indicate whether I mean these to be taken as a sequence or as a set; that is, must they be performed in a particular order or do I merely mean that so long as they are all performed, they may be performed in any sequence at any time and whenever convenient for efficiency.

Strachey: You can, in fact, impose an ordering on a language which doesn't have the sequencing of commands by nesting the functional applications.

Landin: The point is that when you compound functional expressions you are imposing a partial ordering, and when you decompose this into commands you are unnecessarily giving a lot of inforination about sequencing.

Strachey: One inconvenient thing about a purely imperative language is that you have to specify far too much sequencing. For example, if you wish to do a matrix multiplication, you have to do n a multiplications. If you write an ordinary program to do this, you have to specify the exact sequence which they are all to be done. Actually, it doesn't matter in what order you do the multiplications so long as you add them togcther in the right groups. Thus the ordinary sort of imperative language imposes much too much sequencing, which makes it very difficult to rearrange if you want to make things more efficient. -

Expression and Data

So functional programming is about expressions instead of statements. As such it is interesting to note that expressions are also how you "express" your data model. There was an interesting example of that in Haskell Cafe's mailing list:

A question was asked as: "

Why Either = Left | Right instead of something like Result = Success | Failure" This was interesting in the answers:Either is a generic sum type. That is, "Either A B" only means "either you have an A, or you have a B". Use of Left to represent failure is merely a matter of convention. Similarly, the generic product type in Haskell is the 2-tuple--"(A, B)" only means "you have both an A and a B".

-

Monadic Web design in future of Cloud Computing?

In all discussions around cloud computing, at least to me, it seemsthat there are too much attention to low level detail and not enough on the bigger more abstract picture.

I think this presentation describes an interesting alternative to the conventional thinking on what cloud computing will, or at least should be. (

Even though the presentation talks about search, there may be bigger point in the approach to the problem.

In the presentation the speaker shows that an application can be broken down into various well known notions. I would think map/reduce would be one such notion. But there are other notions such as non determinism, back tracking, .... that an application may be based on.

In context of cloud, looking at it in abstract, if the underlying computational notions are cloud-aware/capable, or at least well understood as a cloud component, then application written on them can easily run in a cloud just as simply it can run on a single machine. The notions can be made to hide all the plumbing and make the application cloud agnostic. The big change from the existing imperative programming is a re-evaluation of the application along functional model to separate the problem domain logic from the notions. In the new model the application can be made to run in the cloud by only changing the underlying notions. This is exactly what happened when people redid their application to run in the cloud as Map/Reduce. But Map/Reduce can't be the only notion that can be made into a cloud. To find other one needs to go back and study the notions of computation in more abstract terms and implement them as cloud. The presentation shows examples of such an approach in context of sophisticated search queries. -

Conceptual Tool

In Wikipedia Domain Specific Language is defined as:

a programming language or specification

language dedicated to a particular problem

domain, a particular problem representation

technique, and/or a particular solution technique

I have been looking for a better "frame", I may have found one.

I was looking at A domain-specific language for experimental

game theory paper. And this Tutorial in game theory had a sentence that best captured the "DSL think" that I am looking for. On 2nd slide it says:"To analyze strategic behavior we need the conceptual tool of game theory..."

A DSL is essentially allows you to apply the conceptual tools to your domain. The tools in turn need the underlying data in format that is also covered in your DSL. -

Functional Programming Think...FP is a category!

In many of the articles/books on Category Theory the Category theory is explained in terms of mathematical concepts of Rings, Sets, Fields... I have been struggling to see how it all relate to functional programming. So this might be useful for others too...

In section 2.2 this Category Theory lecture notes there is an interesting explanation of explaning how functional programming forms a category. -

A metaphor for categories and mappings

I was trying to see if Design Pattern concepts (specially compositional patterns) have been explained in terms of functors, monads,.... I ran into this post about category theory that is enlightening...

A metaphor for categories and mappings

This section in particular:For a really simple, real world example, consider a lawnmower. When out of gas/petrol, it needs to be refueled. The category of empty lawnmowers has an arrow/mapping/morphism to the category of full lawnmowers. Here the focus is more on the _process_ of mapping one category into another, or mapping one collection of things into another collection.

It's been said that category theory places mappings/arrows on a fully-equal footing with objects/points/things.

Now back to the lawnmower example. A child walks out to watch his father mowing the lawn. The lawmower runs out of gas. The child says "Daddy, I think the lawnmower needs a drink."

What the child is doing is using his own set of mappings, from thirsty to not thirsty, to metaphorize the process of fueling the lawnmower. There's a mapping between these mappings, a functor. (I happen to think this categorical way of thinking is immensely important for how we understand the world, how we might program artificial intelligences, and so on. Even more than "design patterns," I think we and other creatures chunk the world into pieces we can understand by finding the mappings and functors and so on which allow us to grok the world. We are, I think, category theory engines.)

Also this in the commentsA more useful way to think about category theory compared to set theory is to view it as a kind of inversion of reasoning. In set theory everything is built up, and we understand objects by seeing what they are made of -- picking apart their internals. We relate two objects by comparing what they are made of. Category theory turns that on its head and takes relationships between objects as primitive. From CT's point of view objects are opaque; we understand an object not by peeking inside at it's internal structure, as we would if we were using set theoretic thinking, but rather by examining how the object relates to other objects.

-

Functional Programming Think

It seems that as I am learning more Haskell, I find more nuggets in paper that I had read before. For instance, John Hughes Generalising Monads to Arrows paper has some very interesting insight into functional programming mind set.If this were an isolated case we might simply ignore it. But Swierstra and Duponcheel’s idea is clearly much more widely applicable: to optimise a combinator library, rede ne the combinators to collect static properties of the computations they construct, and then use those static properties to optimise the dynamic computations. If we think of a library as de ning a domain speci c ‘language’, whose constructions are represented as combinators, then Swierstra and Duponcheel’s idea is to implement the language via a combination of a static analysis and an optimised dynamic semantics. We may clearly wish to do this very often indeed. But every time we do, the type of >>= will make it impossible to use a monadic interface!

And also on Category Theory3.1 On Category Theory

Before we do so, we make a short digression on the subject of category theory. The concept of a monad was developed by category theorists long before it eventually found an application in functional programming. Some might find it surprising that something so abstract as category theory should turn out to be useful for something so concrete as programming. After all, category theory is, in a sense, so abstract as to be rather unsatisfying: it is ‘all definitions and no theorems’ , almost everything turns out to be a category if you look at it long enough, to say something is a category is actually to say very little about it. The same is true of most categorical concepts: they have very many possible instantiations, and so to say that something is, for example, a monad, is to say very little. This extreme generality is one reason why it is hard for the beginner to develop good intuitions about category theory, but it is hardly surprising: category theory was, after all, developed to be a ‘theory of everything’, a framework into which very many di erent mathematical structures would t. But why should a theory so abstract be of any use for programming? The answer is simple: as computer scientists, we value abstraction! When we design the interface to a software component, we want it to reveal as little as possible about the implementation [think algebra vs calculus]. We want to be able to replace the implementation with many alternatives, many other ‘instances’ of the same ‘concept’. When we design a generic interface to many program libraries, it is even more important that the interface we choose have a wide variety of implementations. It is the very generality of the monad concept which we value so highly, it is because category theory is so abstract that its concepts are so useful for programming.

It is hardly surprising, then, that the generalisation of monads that we present below also has a close connection to category theory. But we stress that our purpose is very practical: it is not to ‘implement category theory’, it is to find a more general way to structure combinator libraries It is simply our good fortune that mathematicians have already done much of the work for us. -

Class types as "convenience functions"

I had hard time understanding the common practice in haskell of MonadXXX class relationship with XXX type (e.g. MonadReader and Reader) until I run into this line:

The MonadWriter class provides a number of convenience functions for working with Writer monads. In the Writer Monad tutorial.

Looking at the class interfaces as "convenience functions" to work with underlying type is interesting way of looking at it. -

What does Semnatic Design mean?

An excellent post on concept of Semantic Design -

Advanced Functional Programming, Lecture Notes

CSE581 - Advanced Functional Programming, Lecture Notes -

What is is that makes camp and darcs unique, and worth us spending time on

-

The best description of Functional Programming

The posting for PhD student position in Ultrech University in Holland seem to have the best description of functional programming I have seen:Lambda-calculus and term rewriting are models of computation lying at the basis of functional programming languages. Both possess syntactic meta-theories based on analyzing rewrite steps.

Source -

It is all about composition....

Here is an interesting talk about the power of composition applied to UI world:

Tangible Functional Programming: a modern marriage of usability and composability

Update: Conal's blog has been moved, I updated the link to the new address. -

Java -> Erlang core + Haskell types

From Joe Armstrong, creator of Erlang...

Source

An interesting interview with Joe Armstrong -

Your design depends on the glue you have...

The Post-it notes is an interesting case where a glue type created a whole set of products and even programming tutorial!!!

In paper Why Functional Programming Matters, John Huges, has interesting observations:

It is now generally accepted that modular design is the key to successful programming, .... However, there is a very important point that is often missed. When writing a modular program to solve a problem, one first divides the problem into subproblems, then solves the sub-problems and combines the solutions. The ways in which one can divide up the original problem depend directly on the ways in which one can glue solutions together. Therefore, to increase ones ability to modularise a problem conceptually, one must provide new kinds of glue in the programming language. Complicated scope rules and provision for separate compilation only help with clerical details; they offer no new conceptual tools decomposing problems.

One can appreciate the importance of glue by an analogy with carpentry. A chair can be made quite easily by making the parts - seat, legs, back etc. - and sticking them together in the right way. But this depends on an ability to make joints and wood glue. Lacking that ability, the only way to make a chair is to carve it in one piece out of a solid block of wood, a much harder task. This example demonstrates both the enormous power of modularisation and the importance of having the right glue.

He says:

To assist modular programming, a language must provide good glue. Functional programming languages provide two new kinds of glue - higher-order functions and lazy evaluation.

On higher-order functions he says:

.....functional languages allow functions which are indivisible in conventional programming languages to be expressed as a combination of parts - a general higher order function and some particular specialising functions. Once defined, such higher order functions allow many operations to be programmed very easily. Whenever a new datatype is defined higher order functions should be written for processing it. This makes manipulating the datatype easy, and also localises knowledge about the details of its representation. The best analogy with conventional programming is with extensible languages - it is as though the programming language can be extended with new control structures whenever desired.

On Lazy evaluation he says:

The other new kind of glue that functional languages provide enables whole programs to be glued together. Recall that a complete functional program is just a function from its input to its output. If f and g are such programs, then (g . f ) is a program which, when applied to its input, computes

g (f input)

The program f computes its output which is used as the input to program g. This might be implemented conventionally by storing the output from f in a temporary file. The problem with this is that the temporary file might occupy so much memory that it is impractical to glue the programs together in this way. Functional languages provide a solution to this problem. The two programs f and g are run together in strict synchronisation. F is only started once g tries to read some input, and only runs for long enough to deliver the output g is trying to read. Then f is suspended and g is run until it tries to read another input. As an added bonus, if g terminates without reading all of f ’s output then f is aborted. F can even be a non-terminating program, producing an infinite amount of output, since it will be terminated forcibly as soon as g is finished. This allows termination conditions to be separated from loop bodies - a powerful modularisation.

Since this method of evaluation runs f as little as possible, it is called “lazy evaluation”. It makes it practical to modularise a program as a generator which constructs a large number of possible answers, and a selector which chooses the appropriate one. While some other systems allow programs to be run together in this manner, only functional languages use lazy evaluation uniformly for every function call, allowing any part of a program to be modularised in this way. Lazy evaluation is perhaps the most powerful tool for modularisation in the functional programmer’s repertoire.

You can read the whole paper: Why Functional Programming Matters -

pure, side effect, types, and Computation....

A friend (a purist one!) was arguing that a purely functional programming paradigm is not useful in real life. His point was that a pure functional language means no side effect. Without side effect there is not much a software can do. Simon Peyton Jones, one of the gurus of the Haskell, had an interesting comment in that a computer program that has no side effect is essentially a heater! As it generates heat and no real useful work.

So what is the disconnect? It has to do with a type systems and the scope of your types (essentially what are you typing in your system?)

Lets start from Assembly programming. In an assembly language program any instruction can read/write from/to any address in its space. To understand the code (as in debugging it) you need to go line by line and make sure you understand the side effects in each instruction.

High-level languages improved on this. For instance in a C program (or Java), you know the types of the input and output to/from your function (or method). The key point to realize is that the type of input/output doesn't say anything as to what the method will (or will not) cause when it is invoked. For instance, if a method takes two integer and returns another integer (as in add), you don't know if the invocation of the method would create any side effects on the file system. The best you can do is to look at the program's (or Class) include (or imports). For instance a class that doesn't import the file system classes (directly or indirectly) then its methods would not have any side effects or dependency in the file system.

Then comes Dependency Injection to solve the problem in Object oriented model. Dependency Injection allowed you to abstract out your object's external dependencies into an interface that would get injected at run time into the class.. It is an improvement on the base Object Oriented model. But on a given method invocation, you still didn't know what would happen. At least there is nothing in the compiler that you can count on to ensure the dependencies. So for example, a call to a method1 on a class can influence a subsequent call to method2 of the class. You would not have any idea about such backdoor connections. With good programing practices you can get by. But when you are thinking about testing, reuse or sharing of code this kind of issues always creep in.

Clearly some languages do away with types all together. But here we are talking about cases where (for whatever reason) you want to have a type system. What is interesting is that in languages that do have type system, the types of the input and output is not enough to be able to make sense of the code (as in maintain it, share it, or write unit tests for it, etc).

If instead of typing the input/out you actually type the computation then you can get lot out of your typing system. Take for instance the saying: life is not a destination, it is journey. In C/Java/etc life is a destination. All you care about is the type of the returned data. In typing the computation it is all about the journey. But how is that different?

Let say your kids are going on a summer trip with their school. What is important to you? Do you just care that they reach their destination or are you also concern about the type of activities that they would experience along the way? For instance, you want to know if they serve alcohol in the trip or not. If kids are going on the trip, then may be you want to find alternatives. If adults are going for the spring break, well that may be the thing you are looking for.

The key is that you don't want to forbid or encourage alcohol consumption (side effects) just that you want to make sure the type of the journey (the computation) matches your requirements as in destination AND journey. You want to know all the effects and not just the return value. After all, if you want a type system, why should you just stop at the return values?

In Haskell, the type of the function is not just the return value, it is the type of computation (journey). That is the key concept. Of course the type of the return value would also be included in the type of computation. Furthermore, there are cleaver ways to take a computation and define as abstract type (similar to template programming C++ or Generics in Java) where the actual type of the return value depends on the invocation of the computation (based on other parameters). If there are any side effects it would also show up on your type system. You have full disclosure, or specification. Modeling IO is an interesting example of using type system to fully define computations with side effect. The IO Inside article might be a good start to get more details.

To sum it up, in a pure functional language you don't want to avoid side effects. You just want to know the computational models that do generate side effect and use them accordingly. The beauty of it is that the compiler ensures that a computation would not violate its computational contract.

Knowing the computation's type (as oppose to just its return value) is quite beneficial. It like the dependency injected but at function level. Hence, the type signature can be used to perform automatic testing of the functions. Furthermore, the caller can control the various aspect of the computation. For instance, lets say the computation signature indicates that the computation generates log messages. The caller can call the function with its own logging implementation in the computation, which sounds awfully like Aspects programming. -

Make friends with Visitors

A good starting point in understanding functional programming is to understand the difference between Visitor and Strategy patterns. Strategy pattern is what almost all OO programmers are familiar with. You have your graphic objects with their draw methods that faithfully draw the object. From outside the caller has no idea about the underlying implementation. In this model it is easy to add new objects to the system. You can add new shapes, just as long as they implement draw method. The pattern has a weakness in the sense that if you want to add a new function then it would be difficult. Let say we need to add a method to calculate the area of a shape, now every object has to be modified to implement the new method. So you in Strategy pattern you are fixing your class interface but let your data be extensible.

Visitor pattern is also an OO pattern but most programmers are not as familiar with it. The pattern is interesting in the sense that unlike the strategy pattern you fix your classes (say only deal with certain shapes) but let actions be extensible. In visitor pattern to add a new requirement like calculating perimeter would be easy but adding a new shape would be difficult. Assigning a new shape will require change to all the existing actions. The most successful visitor pattern implementation are when you work with a specification where your data is defined but your actions are extensible. Say for instance you want to parse XML tree. The specification of the tree structure is defined but what the application does with the tree is left for the application.

You may think to yourself that if I fix my data types then I would not easily be able to extend my system. The key is that your data type should not just include primitive types. It would need to include primitives to modify and compose basic primitives into more complex structure (like tree).

Peter Seibel does a great job discussing the visitor pattern and its implementation in Java vs Lisp. The ideas presented in the video is not lisp specific, but rather a different way of thinking about a problem. Video of his presentation is available online. Kudos to Peter for his no-power-point presentation. -

What do you measure...

In questing Gross Domestic Product(GDP) measurements as valid health economic indicator, Martin Collier of the Glaser Progress Foundation says:The most simple way to put it is you are what you measure, you get what you measure, and you fix what you measure. It's time to measure what matters most, it's time to measure what we value most, instead of simply valuing what we measure. Source

Interesting...You {are, get, fix} what you measure. -

How little software has changed...

It is amazing how much of Computer Science was done way before it became as popular as it is today. It is also amazing how little it has progressed (at least as is practiced by professionals) since the old days. Back in August of 1978 John Backus (The B in BNF and inventor of FORTRAN) had this to say:Programming languages appear to be in trouble. Each successive language incorporates, with a little cleaning up, all the features of its predecessors plus a few more. Some languages have manuals exceeding 500 pages; others cram a complex description into shorter manuals by using dense formalisms. The Department of Defense has current plans for a committee-designed language standard that could require a manual as long as 1,000 pages. Each new language claims new and fashionable features, such as strong typing or structured control statements, but the plain fact is that few languages make programming sufficiently cheaper or more reliable to justify the cost of producing and learning to use them.

Since large increases in size bring only small increases in power, smaller, more elegant languages such as Pascal continue to be popular. But there is a desperate need for a powerful methodology to help us think about programs and no conventional language even begins to meet that need. In fact, conventional languages create

unnecessary confusion in the way we think about programs.

For twenty years programming languages have been steadily progressing toward their present condition of obesity; as a result, the study and invention of programming languages has lost much of its excitement. Instead, it is now the province of those who prefer to work with thick compendia of details rather than wrestle with new

ideas. Discussions about programming languages often resemble medieval debates about the number of angels that can dance on the head of a pin instead of exciting

contests between fundamentally differing concepts.